Integrating Hubble with OpenTelemetry

Prerequisites

A Kubernetes cluster deployed (z-k8s); minikube also works

Cilium installed with external etcd (or, more simply, via the Cilium quick start)

Cilium Hubble observability deployed

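Deployment overview

This walkthrough deploys OpenTelemetry in a Cilium-networked cluster, together with the Jaeger distributed tracing system and cert-manager (X.509 certificate management). It uses CiliumNetworkPolicy and CiliumClusterwideNetworkPolicy to enable DNS and HTTP visibility for a simple demo application. Two OpenTelemetryCollector configurations are involved: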

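First: an OpenTelemetryCollector that deploys the Hubble adaptor and configures the Hubble receiver to read L7 flow data from every node, then exports the resulting trace data to Jaeger.

Second: an OpenTelemetryCollector that deploys the upstream OpenTelemetry distribution as a sidecar for the demo application.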

Basic setup

Install cert-manager (X.509 certificate management), which the OpenTelemetry operator depends on:

kubectl apply -k github.com/cilium/kustomize-bases/cert-manager

Run the following to make sure cert-manager is fully ready:

(
  set -e
  kubectl wait deployment --namespace="cert-manager" --for="condition=Available" cert-manager-webhook cert-manager-cainjector cert-manager --timeout=3m
  kubectl wait pods --namespace="cert-manager" --for="condition=Ready" --all --timeout=3m
  kubectl wait apiservice --for="condition=Available" v1.cert-manager.io v1.acme.cert-manager.io --timeout=3m
  until kubectl get secret --namespace="cert-manager" cert-manager-webhook-ca 2> /dev/null ; do sleep 0.5 ; done
)

The output is:

deployment.apps/cert-manager-webhook condition met
deployment.apps/cert-manager-cainjector condition met
deployment.apps/cert-manager condition met
pod/cert-manager-7b4f4986bb-6sdlt condition met
pod/cert-manager-cainjector-6b9d8b7d57-5fw2r condition met
pod/cert-manager-webhook-d7bc6f65d-25kwr condition met
apiservice.apiregistration.k8s.io/v1.cert-manager.io condition met
apiservice.apiregistration.k8s.io/v1.acme.cert-manager.io condition met
NAME                      TYPE     DATA   AGE
cert-manager-webhook-ca   Opaque   3      115s
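The `until` loop in the readiness script retries forever if the `cert-manager-webhook-ca` secret never appears. A minimal Python sketch, offered as an alternative and not as part of the original procedure, shows how the same check can be bounded by a deadline. `wait_until` and `secret_exists` are hypothetical helper names, and the 180-second default simply mirrors the `--timeout=3m` passed to the `kubectl wait` commands.

```python
import subprocess
import time
from typing import Callable


def wait_until(check: Callable[[], bool], timeout: float = 180.0, interval: float = 0.5) -> bool:
    """Call `check` every `interval` seconds until it returns True or `timeout` seconds pass."""
    deadline = time.monotonic() + timeout
    while True:
        if check():
            return True
        if time.monotonic() >= deadline:
            return False  # give up instead of looping forever
        time.sleep(interval)


def secret_exists(namespace: str, name: str) -> bool:
    """True if `kubectl get secret` exits with status 0, False otherwise."""
    try:
        result = subprocess.run(
            ["kubectl", "get", "secret", "--namespace", namespace, name],
            stdout=subprocess.DEVNULL,
            stderr=subprocess.DEVNULL,
            timeout=30,
        )
    except (FileNotFoundError, subprocess.TimeoutExpired):  # no kubectl, or it hung
        return False
    return result.returncode == 0


# Example (needs kubectl on PATH and cluster access):
# ok = wait_until(lambda: secret_exists("cert-manager", "cert-manager-webhook-ca"), timeout=180)
```

Returning a boolean rather than raising lets the caller decide whether a timeout should abort the setup or just print a warning.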

Deploy the Jaeger operator:

kubectl apply -k github.com/cilium/kustomize-bases/jaeger

The output is:

namespace/jaeger created
customresourcedefinition.apiextensions.k8s.io/jaegers.jaegertracing.io created
serviceaccount/jaeger-operator created
role.rbac.authorization.k8s.io/jaeger-operator created
clusterrole.rbac.authorization.k8s.io/jaeger-operator created
rolebinding.rbac.authorization.k8s.io/jaeger-operator created
clusterrolebinding.rbac.authorization.k8s.io/jaeger-operator created
deployment.apps/jaeger-operator created

Configure a Jaeger instance with an in-memory storage backend:

cat > jaeger.yaml << EOF
apiVersion: jaegertracing.io/v1
kind: Jaeger
metadata:
  name: jaeger-default
  namespace: jaeger
spec:
  strategy: allInOne
  storage:
    type: memory
    options:
      memory:
        max-traces: 100000
  ingress:
    enabled: false
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ""
EOF

kubectl apply -f jaeger.yaml
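With `storage.type: memory`, traces live only in the Jaeger pod's memory and are lost when that pod restarts, while `max-traces: 100000` caps how many are kept. The toy model below illustrates such a cap. It assumes, for illustration only, that the oldest trace is evicted first once the limit is exceeded. `BoundedTraceStore` is a hypothetical name, and this is not Jaeger's implementation.

```python
from collections import OrderedDict
from typing import List, Optional


class BoundedTraceStore:
    """Toy in-memory trace store that keeps at most `max_traces` traces.

    Assumption for illustration: once the cap is exceeded, the trace that was
    first seen earliest is evicted. This is not Jaeger's code.
    """

    def __init__(self, max_traces: int) -> None:
        if max_traces < 1:
            raise ValueError("max_traces must be >= 1")
        self.max_traces = max_traces
        # trace ID -> span names, kept in first-seen order
        self._traces: "OrderedDict[str, List[str]]" = OrderedDict()

    def add_span(self, trace_id: str, span_name: str) -> None:
        if trace_id not in self._traces:
            self._traces[trace_id] = []
            if len(self._traces) > self.max_traces:
                self._traces.popitem(last=False)  # evict the oldest trace
        self._traces[trace_id].append(span_name)

    def get(self, trace_id: str) -> Optional[List[str]]:
        return self._traces.get(trace_id)

    def __len__(self) -> int:
        return len(self._traces)
```

For a demo this bound is harmless. For anything longer-lived, a persistent Jaeger storage backend avoids both the eviction and the loss on restart.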

Deploy the OpenTelemetry operator:

kubectl apply -k github.com/cilium/kustomize-bases/opentelemetry

Configure the Hubble receiver and the Jaeger exporter:

cat > otelcol.yaml << EOF
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: otelcol-hubble
  namespace: kube-system
spec:
  mode: daemonset
  image: ghcr.io/cilium/hubble-otel/otelcol:v0.1.1
  env:
    # set the NODE_IP environment variable using the downward API
    - name: NODE_IP
      valueFrom:
        fieldRef:
          fieldPath: status.hostIP
  volumes:
    # this example connects to the Hubble socket of the Cilium agent
    # using the host port and TLS
    - name: hubble-tls
      projected:
        defaultMode: 256
        sources:
          - secret:
              name: hubble-relay-client-certs
              items:
                - key: tls.crt
                  path: client.crt
                - key: tls.key
                  path: client.key
                - key: ca.crt
                  path: ca.crt
    # it's also possible to use the UNIX socket, for which
    # the following volume will be needed
    # - name: cilium-run
    #   hostPath:
    #     path: /var/run/cilium
    #     type: Directory
  volumeMounts:
    # - name: cilium-run
    #   mountPath: /var/run/cilium
    - name: hubble-tls
      mountPath: /var/run/hubble-tls
      readOnly: true
  config: |
    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: 0.0.0.0:55690
      hubble:
        # NODE_IP is substituted by the collector at runtime;
        # the '\' prefix is required only so that this config can be
        # inlined in the guide and pasted easily, i.e. to stop the
        # shell from substituting it
        endpoint: \${NODE_IP}:4244 # unix:///var/run/cilium/hubble.sock
        buffer_size: 100
        include_flow_types:
          # this sets an L7 flow filter; removing this section will
          # disable filtering and result in all types of flows being
          # turned into spans;
          # other type filters can be set, the names are the same as
          # those used in 'hubble observe -t <type>'
          traces: ["l7"]
        tls:
          insecure_skip_verify: true
          ca_file: /var/run/hubble-tls/ca.crt
          cert_file: /var/run/hubble-tls/client.crt
          key_file: /var/run/hubble-tls/client.key
    processors:
      batch:
        timeout: 30s
        send_batch_size: 100
    exporters:
      jaeger:
        endpoint: jaeger-default-collector.jaeger.svc.cluster.local:14250
        tls:
          insecure: true
    service:
      telemetry:
        logs:
          level: info
      pipelines:
        traces:
          receivers: [hubble, otlp]
          processors: [batch]
          exporters: [jaeger]
EOF

kubectl apply -f otelcol.yaml
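The `batch` processor in this pipeline groups spans before they are exported to Jaeger. A batch is sent as soon as it reaches `send_batch_size` (100) spans, or when `timeout` (30s) expires, whichever comes first. The simplified Python model below sketches only that rule. It uses a hypothetical class `SizeOrTimeoutBatcher` and an explicit `now` clock, and it is not the collector's code.

```python
from typing import List


class SizeOrTimeoutBatcher:
    """Simplified size-or-timeout batching model (not the collector's code)."""

    def __init__(self, send_batch_size: int = 100, timeout: float = 30.0) -> None:
        self.send_batch_size = send_batch_size
        self.timeout = timeout
        self.pending: List[str] = []
        self.sent: List[List[str]] = []  # batches handed to the exporter
        self.last_flush = 0.0

    def _flush(self, now: float) -> None:
        if self.pending:
            self.sent.append(self.pending)
            self.pending = []
        self.last_flush = now  # the timeout is measured from the last send

    def add(self, span: str, now: float) -> None:
        """Buffer a span; send immediately once the batch is full."""
        self.pending.append(span)
        if len(self.pending) >= self.send_batch_size:
            self._flush(now)

    def tick(self, now: float) -> None:
        """Called periodically; sends whatever is pending once `timeout` has passed."""
        if now - self.last_flush >= self.timeout:
            self._flush(now)
```

One practical consequence is that, with little traffic, spans can take up to about 30 seconds to appear in Jaeger.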

Then check that the collector is running correctly as a DaemonSet:

kubectl get pod -n kube-system -l app.kubernetes.io/name=otelcol-hubble-collector

If everything is working, a collector pod is running on each worker node, for example:

NAME                             READY   STATUS    RESTARTS   AGE
otelcol-hubble-collector-2g82m   1/1     Running   0          5m15s
otelcol-hubble-collector-46gtj   1/1     Running   0          5m15s
otelcol-hubble-collector-97pwj   1/1     Running   0          5m15s
otelcol-hubble-collector-qhzkn   1/1     Running   0          5m15s
otelcol-hubble-collector-xt7xl   1/1     Running   0          5m15s
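Instead of counting pods by eye, you can compare the set of nodes that host a Ready collector pod with the set of nodes in the cluster. This is a minimal Python sketch. It assumes `kubectl` is configured for the cluster, and the helper names are hypothetical. Nodes that the DaemonSet does not schedule onto, such as tainted control-plane nodes, will legitimately show up as missing.

```python
import json
import subprocess
from typing import Any, Dict, Set


def ready_nodes(pod_list: Dict[str, Any]) -> Set[str]:
    """Names of nodes hosting at least one pod whose Ready condition is "True"."""
    nodes: Set[str] = set()
    for pod in pod_list.get("items", []):
        conditions = pod.get("status", {}).get("conditions", [])
        if any(c.get("type") == "Ready" and c.get("status") == "True" for c in conditions):
            node = pod.get("spec", {}).get("nodeName")
            if node:
                nodes.add(node)
    return nodes


def missing_collector_nodes(node_list: Dict[str, Any], pod_list: Dict[str, Any]) -> Set[str]:
    """Nodes in the cluster that have no Ready collector pod."""
    all_nodes = {n["metadata"]["name"] for n in node_list.get("items", [])}
    return all_nodes - ready_nodes(pod_list)


def kubectl_json(*args: str) -> Dict[str, Any]:
    """Run `kubectl <args> -o json` and return the parsed JSON."""
    out = subprocess.run(
        ["kubectl", *args, "-o", "json"], check=True, capture_output=True, text=True
    ).stdout
    return json.loads(out)


# Example (needs cluster access):
# pods = kubectl_json("get", "pod", "-n", "kube-system",
#                     "-l", "app.kubernetes.io/name=otelcol-hubble-collector")
# print(missing_collector_nodes(kubectl_json("get", "nodes"), pods))
```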

Check the collector logs:

kubectl logs -n kube-system -l app.kubernetes.io/name=otelcol-hubble-collector

You can now reach the Jaeger web UI by port-forwarding its query service and opening http://localhost:16686:

kubectl port-forward svc/jaeger-default-query -n jaeger 16686

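Deploying the podinfo demo application

The following deployment verifies the tracing system set up above by providing a DNS and HTTP tracing demo.

Create a namespace for the demo application: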

kubectl create ns podinfo

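Enable HTTP visibility for the podinfo application and DNS visibility for all DNS traffic. The default-allow policy keeps egress traffic flowing, because once an egress policy selects an endpoint, Cilium denies any egress that no policy explicitly allows: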

cat > visibility-policies.yaml << EOF
---
apiVersion: cilium.io/v2
kind: CiliumClusterwideNetworkPolicy
metadata:
  name: default-allow
spec:
  endpointSelector: {}
  egress:
    - toEntities:
        - cluster
        - world
    - toEndpoints:
        - {}
---
apiVersion: cilium.io/v2
kind: CiliumClusterwideNetworkPolicy
metadata:
  name: dns-visibility
spec:
  endpointSelector: {}
  egress:
    - toEndpoints:
        - matchLabels:
            k8s:io.kubernetes.pod.namespace: kube-system
            k8s:k8s-app: kube-dns
      toPorts:
        - ports:
            - port: "53"
              protocol: ANY
          rules:
            dns:
              - matchPattern: "*"
    - toFQDNs:
        - matchPattern: "*"
    - toEndpoints:
        - {}
---
apiVersion: cilium.io/v2
kind: CiliumNetworkPolicy
metadata:
  name: http-visibility
  namespace: podinfo
spec:
  endpointSelector: {}
  egress:
    - toPorts:
        - ports:
            - port: "9898"
              protocol: TCP
          rules:
            http:
              - method: ".*"
    - toEndpoints:
        - {}
EOF

kubectl apply -f visibility-policies.yaml

Output:

ciliumclusterwidenetworkpolicy.cilium.io/default-allow created
ciliumclusterwidenetworkpolicy.cilium.io/dns-visibility created
ciliumnetworkpolicy.cilium.io/http-visibility created
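These policies send DNS traffic (port 53) and podinfo HTTP traffic (port 9898) through Cilium's L7 proxy, so Hubble records L7 flows for that traffic. The Hubble receiver's `include_flow_types` setting, `traces: ["l7"]`, then turns only those L7 flows into spans, and removing the setting turns every flow type into spans. The toy filter below models that rule. It uses hypothetical, simplified flow records (plain dicts with a `type` key, named after the `hubble observe -t` types) rather than real Hubble flow objects.

```python
from typing import Any, Dict, Iterable, List, Optional

# Hypothetical simplified flow record, e.g. {"type": "l7", "summary": "..."}
Flow = Dict[str, Any]


def select_flows(flows: Iterable[Flow], include_types: Optional[List[str]]) -> List[Flow]:
    """Keep only flows whose type is listed; with no filter (None), keep every flow."""
    flows = list(flows)
    if include_types is None:  # filter section removed: every flow becomes a span
        return flows
    wanted = {t.lower() for t in include_types}
    return [f for f in flows if str(f.get("type", "")).lower() in wanted]
```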

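The podinfo application is instrumented with the OpenTelemetry SDK. One way to export its traces is through a collector sidecar, which forwards them over OTLP to the otelcol-hubble collector on port 55690. Add the sidecar configuration: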

cat > otelcol-podinfo.yaml << EOF
apiVersion: opentelemetry.io/v1alpha1
kind: OpenTelemetryCollector
metadata:
  name: otelcol-podinfo
  namespace: podinfo
spec:
  mode: sidecar
  config: |
    receivers:
      otlp:
        protocols:
          http: {}
    exporters:
      logging:
        loglevel: info
      otlp:
        endpoint: otelcol-hubble-collector.kube-system.svc.cluster.local:55690
        tls:
          insecure: true
    service:
      telemetry:
        logs:
          level: info
      pipelines:
        traces:
          receivers: [otlp]
          exporters: [otlp, logging]
EOF

kubectl apply -f otelcol-podinfo.yaml
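A collector in `mode: sidecar` is injected only into pods that request it. The OpenTelemetry operator looks for the `sidecar.opentelemetry.io/inject` annotation on the pod (or its namespace), and its value can be `"true"` or the name of a sidecar-mode collector such as `otelcol-podinfo`. For your own workloads, here is a sketch of adding that annotation to a Deployment manifest held as a Python dict. `with_otel_sidecar` is a hypothetical helper. Note that the annotation belongs on the pod template, not on the Deployment's own metadata.

```python
import copy
from typing import Any, Dict

INJECT_ANNOTATION = "sidecar.opentelemetry.io/inject"


def with_otel_sidecar(deployment: Dict[str, Any], value: str = "true") -> Dict[str, Any]:
    """Return a copy of a Deployment manifest whose pod template requests sidecar injection.

    `value` may be "true" or the name of a sidecar-mode OpenTelemetryCollector
    in the same namespace (for example "otelcol-podinfo").
    """
    result = copy.deepcopy(deployment)  # leave the caller's manifest untouched
    template = result.setdefault("spec", {}).setdefault("template", {})
    annotations = template.setdefault("metadata", {}).setdefault("annotations", {})
    annotations[INJECT_ANNOTATION] = value
    return result
```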

Now deploy the podinfo application:

kubectl apply -k github.com/cilium/kustomize-bases/podinfo

Check the deployments and services:

kubectl get -n podinfo deployments,services

The output is:

NAME                               READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/podinfo-backend    2/2     2            2           81s
deployment.apps/podinfo-client     1/2     2            1           81s
deployment.apps/podinfo-frontend   1/2     2            1           81s

NAME                       TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)             AGE
service/podinfo-backend    ClusterIP   10.106.77.139   <none>        9898/TCP,9999/TCP   81s
service/podinfo-client     ClusterIP   10.102.155.20   <none>        9898/TCP,9999/TCP   81s
service/podinfo-frontend   ClusterIP   10.99.183.236   <none>        9898/TCP,9999/TCP   81s

Generating load on the application
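Here is a minimal Python sketch for sending traced requests. It assumes `kubectl port-forward -n podinfo svc/podinfo-client 9898` is running and that podinfo answers `POST /echo` by echoing the request body. The URL, the payload, and the helper names are assumptions for illustration. Each request carries a fresh W3C `traceparent` header: version `00`, a random 16-byte trace ID, a random 8-byte parent ID, and flags `01` (sampled). If podinfo propagates W3C trace context, its spans then join that trace.

```python
import secrets
import urllib.request


def new_traceparent(sampled: bool = True) -> str:
    """Build a W3C Trace Context `traceparent` value: version-traceid-parentid-flags."""
    trace_id = secrets.token_hex(16)  # 16 random bytes -> 32 lowercase hex chars
    while int(trace_id, 16) == 0:     # an all-zero trace ID is invalid
        trace_id = secrets.token_hex(16)
    parent_id = secrets.token_hex(8)  # 8 random bytes -> 16 lowercase hex chars
    while int(parent_id, 16) == 0:    # an all-zero parent ID is invalid
        parent_id = secrets.token_hex(8)
    flags = "01" if sampled else "00"
    return f"00-{trace_id}-{parent_id}-{flags}"


def post_with_trace(url: str, body: bytes, traceparent: str, timeout: float = 10.0) -> bytes:
    """POST `body` to `url` with the given traceparent header and return the response body."""
    req = urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"traceparent": traceparent, "Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read()


# Hypothetical usage (requires the port-forward described above):
# for _ in range(10):
#     tp = new_traceparent()
#     print("traceparent:", tp)
#     print(post_with_trace("http://127.0.0.1:9898/echo",
#                           b'["Hubble+OpenTelemetry=ROCKS"]', tp).decode())
```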

The terminal then prints trace information similar to the following:

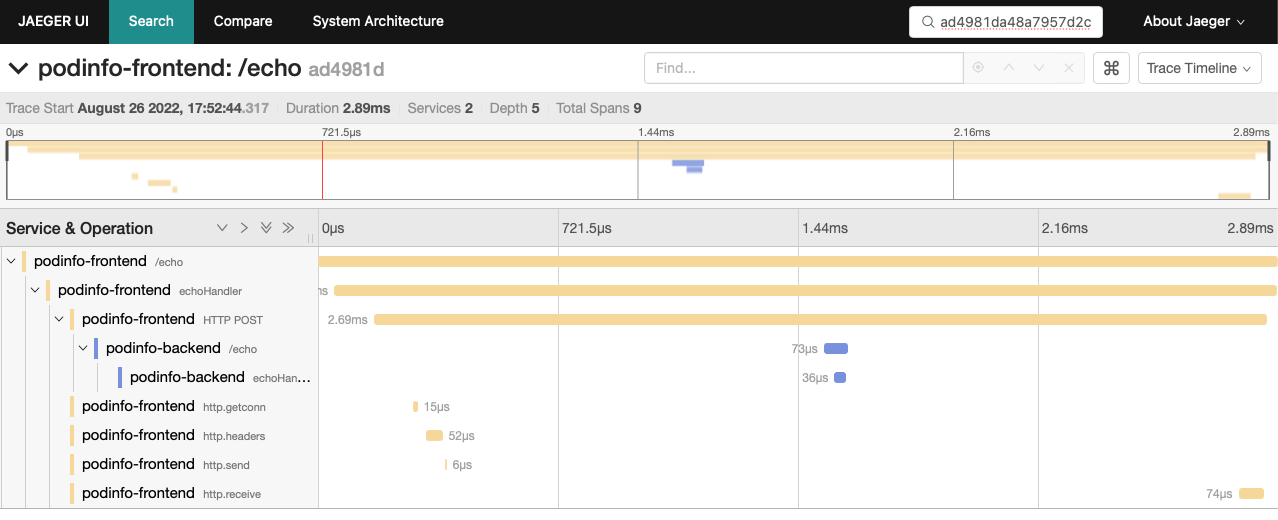

traceparent: 00-ad4981da48a7957d2c3ec1f7f722ba87-964601c0a0298a04-01
[
  "Hubble+OpenTelemetry=ROCKS"
]

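Here ad4981da48a7957d2c3ec1f7f722ba87 is the trace ID. A traceparent value has the form version-traceid-parentid-flags, so the trace ID is its second field.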
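Here is a small Python sketch that splits a `traceparent` value into its W3C Trace Context fields and builds Jaeger links for the trace ID. It assumes the port-forward to `localhost:16686` from the Jaeger step. `/trace/<id>` is the Jaeger UI page for a single trace. `/api/traces/<id>` is the JSON endpoint the UI itself calls, which Jaeger treats as internal rather than a stable public API. The helper names are hypothetical.

```python
import re
from typing import Dict, NamedTuple

# version (2 hex) - trace-id (32 hex) - parent-id (16 hex) - flags (2 hex)
_TRACEPARENT = re.compile(r"([0-9a-f]{2})-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})")


class TraceParent(NamedTuple):
    version: str
    trace_id: str
    parent_id: str
    flags: str

    @property
    def sampled(self) -> bool:
        return (int(self.flags, 16) & 0x01) == 1  # lowest flag bit = sampled


def parse_traceparent(value: str) -> TraceParent:
    """Split a W3C `traceparent` header value into its four fields."""
    m = _TRACEPARENT.fullmatch(value.strip())
    if m is None:
        raise ValueError(f"not a traceparent value: {value!r}")
    tp = TraceParent(*m.groups())
    # version ff and all-zero IDs are invalid per the W3C Trace Context spec
    if tp.version == "ff" or set(tp.trace_id) == {"0"} or set(tp.parent_id) == {"0"}:
        raise ValueError(f"invalid traceparent value: {value!r}")
    return tp


def jaeger_trace_urls(trace_id: str, base: str = "http://localhost:16686") -> Dict[str, str]:
    """UI page and JSON endpoint for one trace on a Jaeger query service."""
    return {
        "ui": f"{base}/trace/{trace_id}",
        "api": f"{base}/api/traces/{trace_id}",
    }
```

For the output shown, `parse_traceparent(...).trace_id` yields the same ID that is searched for in the Jaeger UI.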

In the Jaeger UI, you can search for this trace ID to find the trace for the session: