Configuring the Ceph iSCSI Gateway

After completing the Ceph iSCSI installation, you can configure a Ceph Block Device (RBD) image to be exported over iSCSI.

Creating the rbd pool

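The upstream documentation says that gwcli needs a pool named rbd. In practice, a Ceph Block Device (RBD) pool with any name can be created and used to store the metadata of the iSCSI configuration. First check whether such a pool already exists: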

ceph osd lspools

The output here is:

1 .mgr

2 libvirt-pool

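Here libvirt-pool is the RBD pool I created for libvirt while working through "Mobile Cloud Computing: Integrating Libvirt with Ceph RBD", but I never actually used it, because Arch Linux ARM does not provide an RBD driver. That OSD pool was created through the web management interface, and its pg_num = 32 setting (ceph.conf) has already been verified and adjusted.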
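The pool check above can also be scripted. The following is a minimal sketch, assuming the plain-text output format of ceph osd lspools shown above ("<id> <name>" per line); the helper names parse_lspools and pool_exists are hypothetical, and the sample text stands in for the real command output.

```python
from typing import Dict


def parse_lspools(output: str) -> Dict[int, str]:
    """Parse plain-text `ceph osd lspools` output into {pool_id: pool_name}."""
    pools = {}
    for line in output.splitlines():
        line = line.strip()
        if not line:
            continue  # the listing may contain blank lines
        pool_id, _, name = line.partition(" ")
        if pool_id.isdigit() and name.strip():
            pools[int(pool_id)] = name.strip()
    return pools


def pool_exists(output: str, name: str) -> bool:
    """Return True if a pool with the given name appears in the listing."""
    return name in parse_lspools(output).values()


# Sample text copied from the listing above (not a live query).
sample = "1 .mgr\n2 libvirt-pool\n"
print(pool_exists(sample, "rbd"))
print(pool_exists(sample, "libvirt-pool"))
```

On a real node the sample string would be replaced by the captured output of the command, for example via subprocess.run with capture_output=True.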

(Deprecated) Run the following commands to create a pool named rbd:

sudo ceph osd pool create rbd

sudo rbd pool init rbd

Note

I ran into an odd problem here. Even though /etc/ceph/ceph.conf already contains:

osd pool default pg num = 32

osd pool default pgp num = 32

(values chosen to match what the web management interface uses), the command still fails with:

Error ERANGE: 'pgp_num' must be greater than 0 and lower or equal than 'pg_num', which in this case is 1

For now I create pools through the web management interface and will investigate this later.

(Deprecated) Afterwards, running ceph osd lspools again shows a pool named rbd:

1 .mgr

2 libvirt-pool

3 rbd

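Note

In practice I found the original English documentation somewhat ambiguous: any Ceph Block Device (RBD) pool can be used for the iSCSI target gateway, so for the rest of this walkthrough I ended up using libvirt-pool.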

On each iSCSI gateway node (a-b-data-2 and a-b-data-3 in my setup), create the configuration file /etc/ceph/iscsi-gateway.cfg:

[config]

# Name of the Ceph storage cluster. A suitable Ceph configuration file allowing

# access to the Ceph storage cluster from the gateway node is required, if not

# colocated on an OSD node.

cluster_name = ceph

# Place a copy of the ceph cluster's admin keyring in the gateway's /etc/ceph

# directory and reference the filename here

gateway_keyring = ceph.client.admin.keyring

# API settings.

# The API supports a number of options that allow you to tailor it to your

# local environment. If you want to run the API under https, you will need to

# create cert/key files that are compatible for each iSCSI gateway node, that is

# not locked to a specific node. SSL cert and key files *must* be called

# 'iscsi-gateway.crt' and 'iscsi-gateway.key' and placed in the '/etc/ceph/' directory

# on *each* gateway node. With the SSL files in place, you can use 'api_secure = true'

# to switch to https mode.

# To support the API, the bare minimum settings are:

api_secure = false

# Additional API configuration options are as follows, defaults shown.

api_user = admin

api_password = admin

api_port = 5001

trusted_ip_list = 192.168.8.205,192.168.8.206

Note

When simple API authentication is configured, trusted_ip_list must be set as well; otherwise the gateway cannot be managed.

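Note

trusted_ip_list is the list of IP addresses on each iSCSI gateway that are used for management operations such as target creation and LUN export. These IPs can be the same ones used for iSCSI data (READ/WRITE commands to and from the RBD image), but using separate IPs is recommended.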
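The rule in the notes above (API credentials require a non-empty trusted_ip_list) can be checked before restarting the services. This is a minimal sketch using Python's standard configparser; the function name check_gateway_config is hypothetical, and the check encodes only the rule stated in this document, not the full validation that ceph-iscsi performs.

```python
import configparser
from typing import List


def check_gateway_config(text: str) -> List[str]:
    """Return a list of problems found in iscsi-gateway.cfg content."""
    # interpolation=None so a '%' in a password is read literally.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(text)
    if not parser.has_section("config"):
        return ["missing [config] section"]
    cfg = parser["config"]
    problems = []
    has_auth = bool(cfg.get("api_user")) and bool(cfg.get("api_password"))
    trusted = [ip.strip() for ip in cfg.get("trusted_ip_list", "").split(",")
               if ip.strip()]
    if has_auth and not trusted:
        problems.append("api_user/api_password set but trusted_ip_list is empty")
    return problems


# Values taken from the configuration shown above.
example = """
[config]
cluster_name = ceph
api_secure = false
api_user = admin
api_password = admin
api_port = 5001
trusted_ip_list = 192.168.8.205,192.168.8.206
"""
print(check_gateway_config(example))
```

To check a real file, read /etc/ceph/iscsi-gateway.cfg into a string and pass it to the function.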

On each iSCSI gateway node, enable and start the API services:

systemctl daemon-reload

systemctl enable rbd-target-gw

systemctl start rbd-target-gw

systemctl enable rbd-target-api

systemctl start rbd-target-api

Here I hit an error when starting the rbd-target-gw service:

Dec 18 23:38:54 a-b-data-2.dev.cloud-atlas.io systemd[1]: rbd-target-gw.service: Scheduled restart job, restart counter is at 3.

Dec 18 23:38:54 a-b-data-2.dev.cloud-atlas.io systemd[1]: Stopped rbd-target-gw.service - Setup system to export rbd images through LIO.

Dec 18 23:38:54 a-b-data-2.dev.cloud-atlas.io systemd[1]: rbd-target-gw.service: Start request repeated too quickly.

Dec 18 23:38:54 a-b-data-2.dev.cloud-atlas.io systemd[1]: rbd-target-gw.service: Failed with result 'exit-code'.

Dec 18 23:38:54 a-b-data-2.dev.cloud-atlas.io systemd[1]: Failed to start rbd-target-gw.service - Setup system to export rbd images through LIO.

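Getting rbd-target-gw running is essential, because every later gwcli operation goes through the API gateway.

I tried rebooting the operating system, and after the reboot both rbd-target-gw and rbd-target-api started normally. The failure therefore appears to be related to a dependency that was not yet satisfied before the reboot.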

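Configuring the target

gwcli can create and configure iSCSI targets and RBD images, and it replicates the configuration across gateways. Lower-level tools such as targetcli and rbd can do the same work, but only on the local node, so do not use them directly; use the higher-level gwcli instead.

On one Ceph iSCSI gateway node, start the Ceph iSCSI gateway command-line tool for an interactive session: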

gwcli

Note

This drops you into a filesystem-like hierarchy. Running ls shows a tree that combines the underlying iSCSI target and rbd structures:

/> ls /

o- / ................................................................... [...]

o- cluster ................................................... [Clusters: 1]

| o- ceph ...................................................... [HEALTH_OK]

| o- pools .................................................... [Pools: 3]

| | o- .mgr ............ [(x3), Commit: 0.00Y/46368320K (0%), Used: 1356K]

| | o- libvirt-pool ...... [(x3), Commit: 0.00Y/46368320K (0%), Used: 12K]

| | o- rbd ............... [(x3), Commit: 0.00Y/46368320K (0%), Used: 12K]

| o- topology .......................................... [OSDs: 3,MONs: 3]

o- disks ................................................. [0.00Y, Disks: 0]

o- iscsi-targets ......................... [DiscoveryAuth: None, Targets: 0]

Enter iscsi-targets and create a target named iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw:

/> cd iscsi-targets

/iscsi-targets> ls

o- iscsi-targets ..................... [DiscoveryAuth: None, Targets: 0]

/iscsi-targets> create iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw

ok

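Create the iSCSI gateways. The IP addresses given here are the ones used for read/write commands; they can be the same as those in trusted_ip_list, but separate IPs are recommended: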

/iscsi-target...-igw/gateways> create a-b-data-2 192.168.8.205 skipchecks=true

The first gateway defined must be the local machine

/iscsi-target...-igw/gateways> create a-b-data-2.dev.cloud-atlas.io 192.168.8.205 skipchecks=true

OS version/package checks have been bypassed

Adding gateway, sync'ing 0 disk(s) and 0 client(s)

ok

/iscsi-target...-igw/gateways> create a-b-data-3.dev.cloud-atlas.io 192.168.8.206 skipchecks=true

OS version/package checks have been bypassed

Adding gateway, sync'ing 0 disk(s) and 0 client(s)

ok

Note

My first attempt, create a-b-data-2 192.168.8.205 skipchecks=true, failed with:

The first gateway defined must be the local machine

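According to Bug 1979449 - iSCSI: Getting the error "The first gateway defined must be the local machine" while adding gateways, the cause is that ceph-iscsi uses the FQDN to verify that the host name matches. Using the fully qualified domain name on the second attempt allowed the gateway to be added.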
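To see in advance which name the first gateway should be created with, the node's FQDN can be printed and compared against the candidate name. This is only an illustration of the behaviour described in the bug report; the function gateway_name_matches is hypothetical and is not taken from the ceph-iscsi source.

```python
import socket


def gateway_name_matches(candidate: str, local_fqdn: str = "") -> bool:
    """Compare a gateway name with the local FQDN, ignoring case.

    local_fqdn can be passed explicitly for testing; by default the
    value reported by socket.getfqdn() on this machine is used.
    """
    local = local_fqdn or socket.getfqdn()
    return candidate.strip().lower() == local.strip().lower()


# The name to use in `create <name> <ip>` for the first (local) gateway,
# under the assumption stated above.
print(socket.getfqdn())

fqdn = "a-b-data-2.dev.cloud-atlas.io"
print(gateway_name_matches("a-b-data-2", fqdn))
print(gateway_name_matches("a-b-data-2.dev.cloud-atlas.io", fqdn))
```

With the host names from this walkthrough, the short name does not match while the full domain name does, which is consistent with the two attempts recorded above.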

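Note

On operating systems other than RHEL/CentOS, or when using the upstream open-source ceph-iscsi code or the ceph-iscsi-test kernel, the skipchecks=true parameter is required. It bypasses the Red Hat kernel validation and the rpm package checks.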

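(Superseded: I now use the libvirt-pool pool.) Add an RBD image in the rbd pool, sized to the entire Ceph BlueStore disk (my disk space is limited, so all of it is allocated to virtual machines): an image named vm_images of size 55G.

Using libvirt-pool as the RBD pool: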

/iscsi-target...-igw/gateways> cd /disks

/disks> create pool=rbd image=vm_images size=55G

ok

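Note

Looking back, the documentation mentioned earlier says the Ceph pool should be named rbd, but I believe any name can be used. Since I had already created the libvirt-pool pool, I create the image in libvirt-pool instead.

A disk can be created with a size larger than the actual physical capacity. For example, my OSD disk is only 46.6G (a physical disk partition), yet an rbd image of a hundred gigabytes or more can be created. Space is consumed only when data is actually written, so once the logical disk starts to fill up, underlying physical disks can be added to expand capacity.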

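Warning

After carefully reading the libvirt storage: iSCSI pool documentation, I found an important point:

The libvirt virtual machine manager cannot operate on an iSCSI target directly; it can only use iSCSI target LUNs that have already been created.

Creating one huge LUN as I did here is therefore not appropriate. (The disk on my company-issued Apple M1 Pro (ARM) machine is very small; with dual boot plus Ceph's three replicas, only about 46.6GB is actually usable.) A separate LUN should be created for each virtual machine instead, so that I can deploy Kubernetes on 5 virtual machines.

I correct this configuration mistake later in this document by rebuilding 5 smaller LUNs for the virtual machines.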

Create an initiator (client) named iqn.1989-06.io.cloud-atlas:libvirt-client, configure the initiator's CHAP username and password, and then add the RBD image disk created earlier to this client:

/disks> cd /iscsi-targets/iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw/hosts

/iscsi-target...csi-igw/hosts> create iqn.1989-06.io.cloud-atlas:libvirt-client

ok

/iscsi-target...ibvirt-client> auth username=libvirtd password=mypassword12

ok

/iscsi-target...ibvirt-client> disk add libvirt-pool/vm_disk

ok

# To delete the disk, use:

cd /disks

delete rbd/vm_images

# This fails with: Unable to delete rbd/vm_images. Mapped to: iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw

# So first enter the target and remove the disk mapping

cd /iscsi-targets/iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw/disks

delete rbd/vm_images

# Then return to /disks and delete the disk

cd /disks

delete rbd/vm_images

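Note

The CHAP username and password must be configured here; otherwise the target rejects every login.

The username must be at least 8 characters long, and the password at least 12 characters long.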
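The length rules in the note above can be checked before typing the auth command into gwcli. This is a minimal sketch that encodes only the two minimum lengths stated in this document; ceph-iscsi may enforce further restrictions (such as maximum lengths or allowed characters) that are not modelled here, and the function name chap_problems is hypothetical.

```python
from typing import List

MIN_USERNAME_LEN = 8   # from the note above
MIN_PASSWORD_LEN = 12  # from the note above


def chap_problems(username: str, password: str) -> List[str]:
    """Return a list of violations of the minimum-length rules."""
    problems = []
    if len(username) < MIN_USERNAME_LEN:
        problems.append(
            f"username has {len(username)} characters, need at least {MIN_USERNAME_LEN}")
    if len(password) < MIN_PASSWORD_LEN:
        problems.append(
            f"password has {len(password)} characters, need at least {MIN_PASSWORD_LEN}")
    return problems


# The credentials used in this walkthrough satisfy both rules.
print(chap_problems("libvirtd", "mypassword12"))
# Shorter values are reported with one message per violated rule.
print(chap_problems("admin", "secret"))
```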

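Adding the iSCSI gateways to the Ceph Dashboard

After the Ceph iSCSI gateways are configured, they still do not appear in the Dashboard. They are managed with the following commands: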

ceph dashboard iscsi-gateway-list

# Gateway URL format for a new gateway: <scheme>://<username>:<password>@<host>[:port]

ceph dashboard iscsi-gateway-add -i <file-containing-gateway-url> [<gateway_name>]

ceph dashboard iscsi-gateway-rm <gateway_name>

For example, create a file named iscsi-gw whose content is the gateway URL (matching the settings in /etc/ceph/iscsi-gateway.cfg):

http://admin:mypassword@192.168.8.205:5001

Then run the following command to add the iSCSI gateway management configuration to the Dashboard:

ceph dashboard iscsi-gateway-add -i iscsi-gw iscsi-gw-1
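The gateway URL format quoted above, <scheme>://<username>:<password>@<host>[:port], can be assembled and sanity-checked with a short script before it is written to the iscsi-gw file. This is a minimal sketch; the function build_gateway_url is hypothetical, and the round-trip check through urlsplit only catches values that make the URL ambiguous to Python's parser, which is an assumption about how the Dashboard reads it.

```python
from typing import Optional
from urllib.parse import urlsplit


def build_gateway_url(scheme: str, username: str, password: str,
                      host: str, port: Optional[int] = None) -> str:
    """Assemble <scheme>://<username>:<password>@<host>[:port]."""
    url = f"{scheme}://{username}:{password}@{host}"
    if port is not None:
        url += f":{port}"
    # Parse the result back and make sure every field survives unchanged.
    try:
        parts = urlsplit(url)
        ok = (parts.username, parts.password, parts.hostname, parts.port) == (
            username, password, host.lower(), port)
    except ValueError:
        ok = False
    if not ok:
        raise ValueError("credentials or host make the gateway URL ambiguous")
    return url


# Values from the example above.
print(build_gateway_url("http", "admin", "mypassword", "192.168.8.205", 5001))
```

The printed line is what goes into the iscsi-gw file passed to ceph dashboard iscsi-gateway-add -i.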

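I ran into a problem here: I had configured the wrong IP address for the iSCSI gateway, and once it was added it could not be removed, because the removal tries to contact the gateway at the wrong IP address and keeps timing out.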

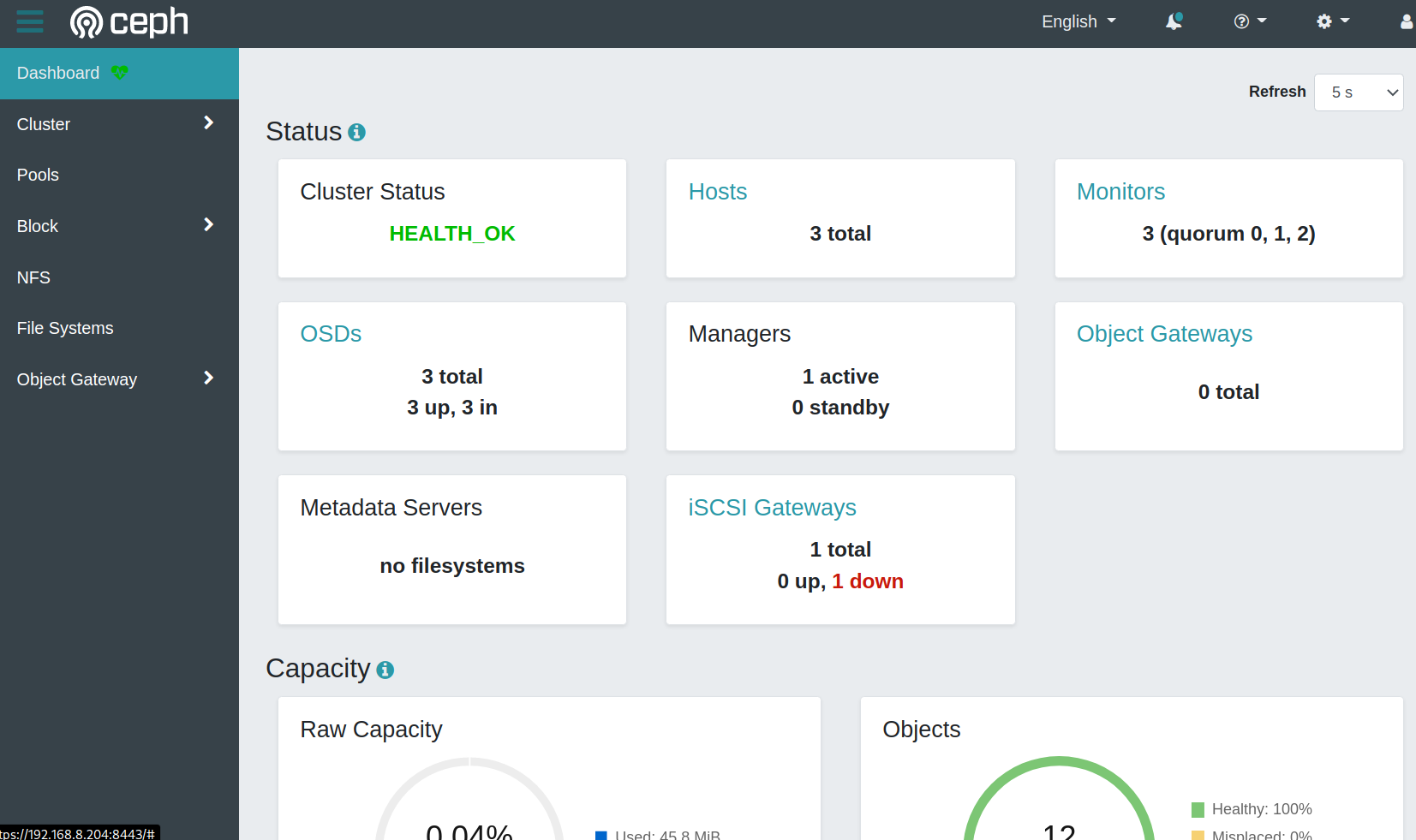

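Worse, this misconfigured iSCSI gateway also caused the Dashboard home page to render blank (presumably the backend was waiting for the iSCSI gateway to respond), and clicking the Block >> iSCSI menu returned a 500 error.

However, after waiting for a while (exactly how long is unknown), Ceph finally detected that the iSCSI gateway was down, and the Dashboard home page rendered normally again.

At that point, running the removal again: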

ceph dashboard iscsi-gateway-rm iscsi-gw-1

succeeds. Then fix the iscsi-gw file and add the iSCSI gateway again:

ceph dashboard iscsi-gateway-add -i iscsi-gw iscsi-gw-1

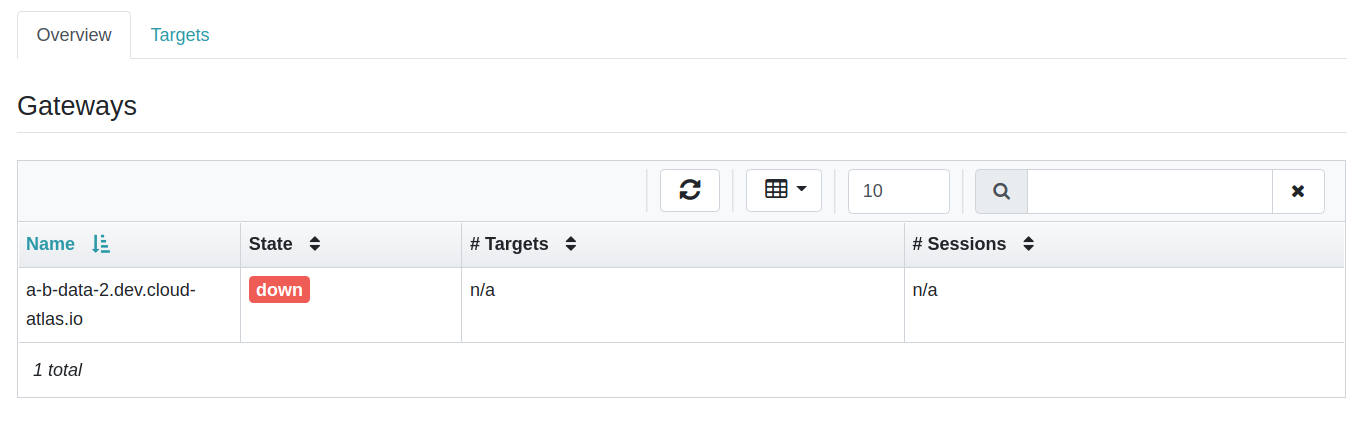

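After this successful addition, the Name listed under Gateways is not iscsi-gw-1 but the gateway's host name, and its state is down:

When API credentials are configured in /etc/ceph/iscsi-gateway.cfg, trusted_ip_list must also allow the IP address of the ceph-mgr host; otherwise the gateway is also shown as down

In this state the gateway cannot be removed using the name iscsi-gw-1; the command reports that it does not exist: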

# ceph dashboard iscsi-gateway-rm iscsi-gw-1

Error ENOENT: iSCSI gateway 'iscsi-gw-1' does not exist

Instead, remove it using the gateway name a-b-data-2.dev.cloud-atlas.io:

# ceph dashboard iscsi-gateway-rm a-b-data-2.dev.cloud-atlas.io

Success

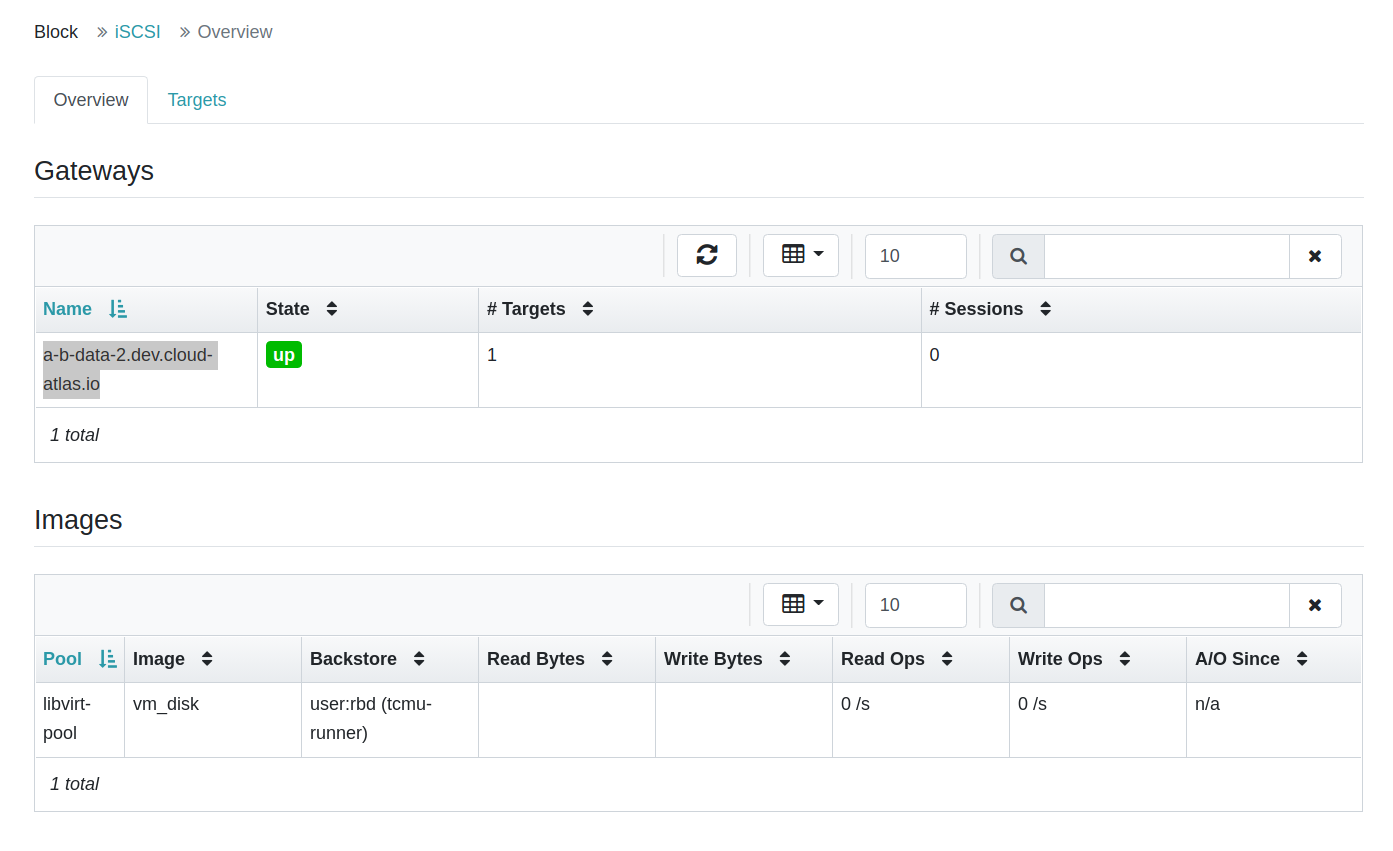

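Comparing the two setups revealed the cause: /etc/ceph/iscsi-gateway.cfg had API authentication configured but no trusted_ip_list, which also prevents ceph-mgr from reaching rbd-target-api. I therefore added the list of allowed IPs to /etc/ceph/iscsi-gateway.cfg, restarted rbd-target-gw and rbd-target-api, and added the gateway again. The iSCSI gateways view then shows node a-b-data-2.dev.cloud-atlas.io back in the UP state:

After adding trusted_ip_list to /etc/ceph/iscsi-gateway.cfg to allow the IP address of the ceph-mgr host, the gateway returns to UP

Repeat the steps above to deploy the iSCSI target gateway on the second host, a-b-data-3: change the iscsi-gw file to use the IP of a-b-data-3, then add it with: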

ceph dashboard iscsi-gateway-add -i iscsi-gw iscsi-gw-2

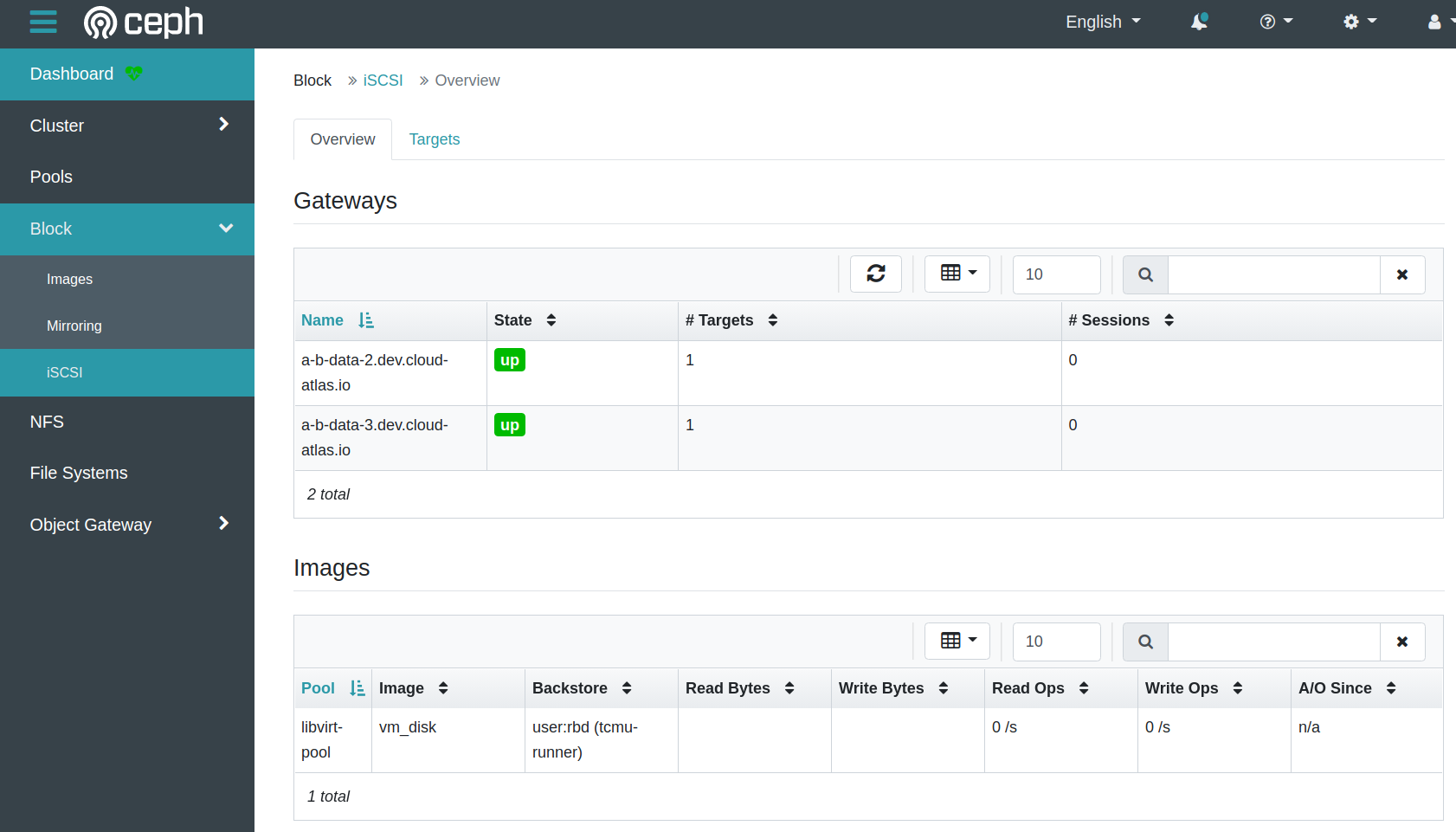

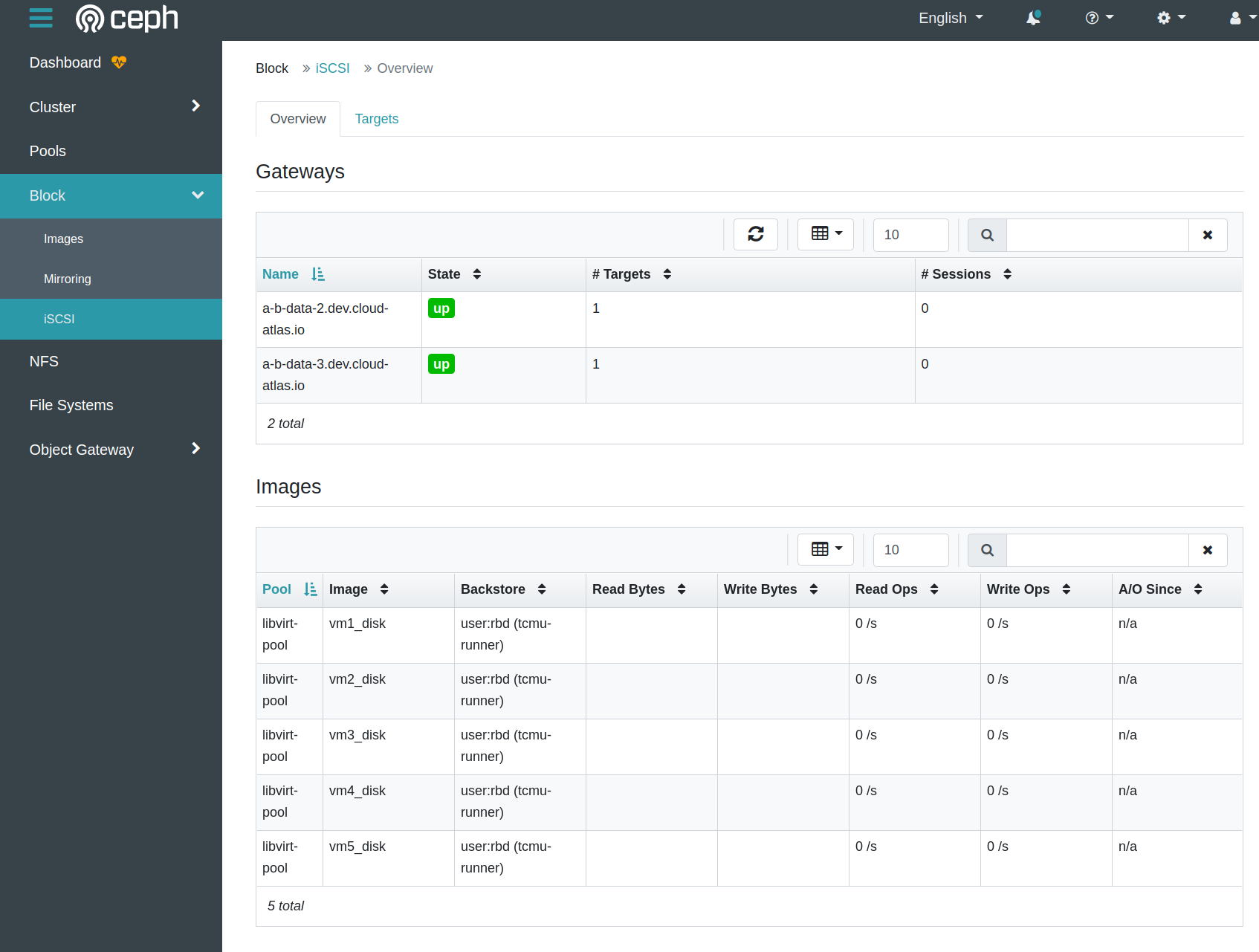

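Once this is done, the Ceph cluster has 2 iSCSI gateways, both providing access to the vm_disk image in the libvirt-pool pool, as shown below:

Two iSCSI gateways configured, providing mapped access to the image

Next, the Ceph iSCSI initiator client can access the storage.

Creating iSCSI target LUNs

As described above, each virtual machine needs its own LUN in the iSCSI target to serve as its virtual disk, so I proceeded as follows.

I had already completed the "Mobile Cloud Computing: Integrating Libvirt with Ceph iSCSI" configuration and started the images_iscsi storage pool. At this point libvirtd is logged in to the iSCSI target and can list the LUNs: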

virsh vol-list images_iscsi

This shows the single, mistakenly oversized LUN:

Name Path

---------------------------------------------------------------------------------------------------------------------------------

unit:0:0:0 /dev/disk/by-path/ip-a-b-data-2.dev.cloud-atlas.io:3260-iscsi-iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw-lun-0

On a Ceph iSCSI gateway node, run the following commands to delete this LUN (note that the disk mapping under the iscsi-targets node must be removed first):

# gwcli

> cd /iscsi-targets/

/iscsi-targets> ls

o- iscsi-targets ................................................................... [DiscoveryAuth: None, Targets: 1]

o- iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw ......................................... [Auth: None, Gateways: 2]

o- disks .............................................................................................. [Disks: 1]

| o- libvirt-pool/vm_disk ......................................... [Owner: a-b-data-2.dev.cloud-atlas.io, Lun: 0]

o- gateways ................................................................................ [Up: 2/2, Portals: 2]

| o- a-b-data-2.dev.cloud-atlas.io .......................................................... [192.168.8.205 (UP)]

| o- a-b-data-3.dev.cloud-atlas.io .......................................................... [192.168.8.206 (UP)]

o- host-groups ...................................................................................... [Groups : 0]

o- hosts ........................................................................... [Auth: ACL_ENABLED, Hosts: 1]

o- iqn.1989-06.io.cloud-atlas:libvirt-client ............................ [LOGGED-IN, Auth: CHAP, Disks: 1(46G)]

o- lun 0 ................................... [libvirt-pool/vm_disk(46G), Owner: a-b-data-2.dev.cloud-atlas.io]

# Removing the mapping here fails:

/iscsi-target...csi-igw/disks> delete libvirt-pool/vm_disk

Failed - Delete target LUN mapping failed - failed on a-b-data-3.dev.cloud-atlas.io. Failed to remove the LUN - Unable to delete libvirt-pool/vm_disk - allocated to iqn.1989-06.io.cloud-atlas:libvirt-client

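# This is because a libvirt client has already started the iSCSI storage pool, i.e. it is logged in to the iSCSI target and holds the disk. So first log the client out by running, on the client:

# virsh pool-destroy images_iscsi (stops the pool)

# Running ls again now shows that the LOGGED-IN flag is gone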

/iscsi-target...ibvirt-client> ls

o- iqn.1989-06.io.cloud-atlas:libvirt-client ............................................. [Auth: CHAP, Disks: 1(46G)]

o- lun 0 ......................................... [libvirt-pool/vm_disk(46G), Owner: a-b-data-2.dev.cloud-atlas.io]

# Now the disk libvirt-pool/vm_disk assigned to iqn.1989-06.io.cloud-atlas:libvirt-client can be removed

/iscsi-target...ibvirt-client> disk remove libvirt-pool/vm_disk

ok

# Next, remove the mapping from the target:

/iscsi-target...ibvirt-client> cd /iscsi-targets/iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw/disks

/iscsi-target...csi-igw/disks> ls

o- disks .................................................................................................. [Disks: 1]

o- libvirt-pool/vm_disk ............................................. [Owner: a-b-data-2.dev.cloud-atlas.io, Lun: 0]

/iscsi-target...csi-igw/disks> delete libvirt-pool/vm_disk

ok

# Finally, delete the actual RBD disk:

/iscsi-target...csi-igw/disks> cd /disks

/disks> ls

o- disks ............................................................................................. [46G, Disks: 1]

o- libvirt-pool ............................................................................... [libvirt-pool (46G)]

o- vm_disk .................................................................. [libvirt-pool/vm_disk (Online, 46G)]

/disks> delete libvirt-pool/vm_disk

ok

Next, create 5 RBD disks and map them to the iSCSI target:

# Create the RBD disks, 5 in total

/disks> cd /disks

/disks> ls

o- disks ........................................................................................... [0.00Y, Disks: 0]

/disks> create pool=libvirt-pool image=vm1_disk size=10G

ok

/disks> ls

o- disks ............................................................................................. [10G, Disks: 1]

o- libvirt-pool ............................................................................... [libvirt-pool (10G)]

o- vm1_disk ............................................................... [libvirt-pool/vm1_disk (Unknown, 10G)]

/disks> create pool=libvirt-pool image=vm2_disk size=9G

ok

/disks> create pool=libvirt-pool image=vm3_disk size=9G

ok

/disks> create pool=libvirt-pool image=vm4_disk size=9G

ok

/disks> create pool=libvirt-pool image=vm5_disk size=9G

ok

/disks> ls

o- disks ............................................................................................. [46G, Disks: 5]

o- libvirt-pool ............................................................................... [libvirt-pool (46G)]

o- vm1_disk ............................................................... [libvirt-pool/vm1_disk (Unknown, 10G)]

o- vm2_disk ................................................................ [libvirt-pool/vm2_disk (Unknown, 9G)]

o- vm3_disk ................................................................ [libvirt-pool/vm3_disk (Unknown, 9G)]

o- vm4_disk ................................................................ [libvirt-pool/vm4_disk (Unknown, 9G)]

o- vm5_disk ................................................................ [libvirt-pool/vm5_disk (Unknown, 9G)]

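# Add the client on the iSCSI gateway (this was already done earlier; the commands are listed here only for completeness and do not need to be run again)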

/disks> cd /iscsi-targets/iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw/hosts

/iscsi-target...csi-igw/hosts> create iqn.1989-06.io.cloud-atlas:libvirt-client

ok

/iscsi-target...ibvirt-client> auth username=libvirtd password=mypassword12

ok

# Then add the 5 disks created above to the client libvirt-client one by one; note that they become lun 0 through lun 4

/iscsi-target...ibvirt-client> disk add libvirt-pool/vm1_disk

ok

/iscsi-target...ibvirt-client> disk add libvirt-pool/vm2_disk

ok

/iscsi-target...ibvirt-client> disk add libvirt-pool/vm3_disk

ok

/iscsi-target...ibvirt-client> disk add libvirt-pool/vm4_disk

ok

/iscsi-target...ibvirt-client> disk add libvirt-pool/vm5_disk

ok

/iscsi-target...ibvirt-client> ls

o- iqn.1989-06.io.cloud-atlas:libvirt-client .................................................. [Auth: CHAP, Disks: 5(46G)]

o- lun 0 ............................................. [libvirt-pool/vm1_disk(10G), Owner: a-b-data-2.dev.cloud-atlas.io]

o- lun 1 .............................................. [libvirt-pool/vm2_disk(9G), Owner: a-b-data-3.dev.cloud-atlas.io]

o- lun 2 .............................................. [libvirt-pool/vm3_disk(9G), Owner: a-b-data-2.dev.cloud-atlas.io]

o- lun 3 .............................................. [libvirt-pool/vm4_disk(9G), Owner: a-b-data-3.dev.cloud-atlas.io]

o- lun 4 .............................................. [libvirt-pool/vm5_disk(9G), Owner: a-b-data-2.dev.cloud-atlas.io]
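The create and disk add commands in the transcript above follow a regular pattern, so the lines to paste into gwcli can be generated from a list of sizes. This is a minimal sketch that only builds command text in the syntax used above; it does not invoke gwcli, and the function name gwcli_disk_commands and the vmN_disk naming scheme are taken from this walkthrough as assumptions rather than from any gwcli convention.

```python
from typing import List, Tuple


def gwcli_disk_commands(pool: str, sizes_gb: List[int]) -> Tuple[List[str], List[str]]:
    """Build the gwcli command lines for a set of per-VM disks.

    Returns (create_cmds, add_cmds): the first list is meant for the
    /disks node, the second for the client node under .../hosts/<iqn>.
    """
    create_cmds, add_cmds = [], []
    for index, size in enumerate(sizes_gb, start=1):
        image = f"vm{index}_disk"
        create_cmds.append(f"create pool={pool} image={image} size={size}G")
        add_cmds.append(f"disk add {pool}/{image}")
    return create_cmds, add_cmds


# Sizes used above: one 10G disk and four 9G disks, 46G in total.
sizes = [10, 9, 9, 9, 9]
creates, adds = gwcli_disk_commands("libvirt-pool", sizes)
print("# run under /disks")
print("\n".join(creates))
print("# run under the client host node")
print("\n".join(adds))
print(f"# total: {sum(sizes)}G")
```

Because LUN numbers are assigned in the order the disks are added, generating the disk add lines in sequence keeps vm1_disk through vm5_disk aligned with lun 0 through lun 4, as in the listing above.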

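Now, in the Ceph Dashboard, the iSCSI Gateways view shows the 5 libvirt-pool disks that were just created

The Ceph Dashboard shows the iSCSI target exposing 5 mapped LUN virtual disks from libvirt-pool

In addition, once the remote "Mobile Cloud Computing: Integrating Libvirt with Ceph iSCSI" configuration is complete, the virsh command can be used to check the volumes in the storage pool: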

virsh vol-list images_iscsi

The 5 iSCSI target LUNs configured above are now visible to the libvirt client:

Name Path

---------------------------------------------------------------------------------------------------------------------------------

unit:0:0:0 /dev/disk/by-path/ip-a-b-data-2.dev.cloud-atlas.io:3260-iscsi-iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw-lun-0

unit:0:0:1 /dev/disk/by-path/ip-a-b-data-2.dev.cloud-atlas.io:3260-iscsi-iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw-lun-1

unit:0:0:2 /dev/disk/by-path/ip-a-b-data-2.dev.cloud-atlas.io:3260-iscsi-iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw-lun-2

unit:0:0:3 /dev/disk/by-path/ip-a-b-data-2.dev.cloud-atlas.io:3260-iscsi-iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw-lun-3

unit:0:0:4 /dev/disk/by-path/ip-a-b-data-2.dev.cloud-atlas.io:3260-iscsi-iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw-lun-4
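When assigning these volumes to virtual machines it helps to map each path back to its LUN number. The following is a minimal sketch that parses the /dev/disk/by-path names exactly as they appear in the listing above; the regular expression is inferred from these five sample paths only, and the function name parse_iscsi_by_path is hypothetical.

```python
import re
from typing import Dict, Optional, Union

# Pattern inferred from the paths listed above:
# /dev/disk/by-path/ip-<portal>:<port>-iscsi-<target iqn>-lun-<n>
BY_PATH_RE = re.compile(
    r"^/dev/disk/by-path/ip-(?P<portal>.+?):(?P<port>\d+)"
    r"-iscsi-(?P<iqn>.+)-lun-(?P<lun>\d+)$"
)


def parse_iscsi_by_path(path: str) -> Optional[Dict[str, Union[str, int]]]:
    """Split an iSCSI by-path device name into portal, port, IQN and LUN."""
    match = BY_PATH_RE.match(path)
    if match is None:
        return None
    return {
        "portal": match.group("portal"),
        "port": int(match.group("port")),
        "iqn": match.group("iqn"),
        "lun": int(match.group("lun")),
    }


path = ("/dev/disk/by-path/ip-a-b-data-2.dev.cloud-atlas.io:3260-iscsi-"
        "iqn.2022-12.io.cloud-atlas.iscsi-gw:iscsi-igw-lun-4")
print(parse_iscsi_by_path(path))
```

With the mapping from the earlier listing, lun 4 corresponds to libvirt-pool/vm5_disk.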